Blog ENG

What do AI-generated images look like?

Short answer – (almost) whatever you want them to. With enough luck and a big enough number of attempts of course. The long answer starts with a short explanation of how text-to-image generation works these days. Or you can just scroll a little lower and see some examples.

Diffusion models

Diffusion is a novel approach to the problem of generative models that has been an underlying factor in the success of all of the recently created text-to-image generation models. An explanation of the process, quoted from the original paper, goes like this:

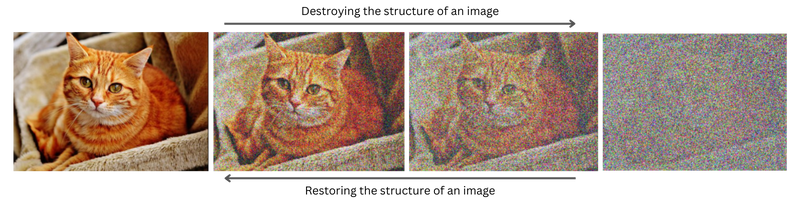

The essential idea, inspired by non-equilibrium statistical physics, is to systematically and slowly destroy structure in a data distribution through an iterative forward diffusion process. We then learn a reverse diffusion process that restores structure in data, yielding a highly flexible and tractable generative model of the data.

The idea is not easy, but the details of the process and its statistical properties that make it so powerful are not something to wrap your mind around in a matter of one blog post. That is why we are gonna do the fun thing and skip it; after three more sentences and an image. A simple depiction of the forward diffusion idea is shown in the image below. The task of the reverse diffusion would be to restore the image, and learning happens in the process. This explanation surely lacks some important information, but it clears up some confusion about the diffusion process.

Text and DALL-E

The model that started the new wave in the field of text2image generation is DALL-E, made by OpenAI. It uses a transformer encoder-decoder architecture to encode text and then decode an image from given text. A normal, even a low-resolution image contains a little bit too much information to be decoded (generated) easily so there is an added step between the decoder output and the real image output. This step is where the diffusion comes in, not in DALL-E, but in DALL-E 2 to take away the show.

The diffusion approach lifted DALL-E 2 to a whole new level, and if it wasn’t done by OpenAI with DALL-E 2 it would probably be done by any other of the diffusion-based models created around the same time by their competition. The use of diffusion motivated the architecture of image generation models to evolve. Combining text embeddings with the noise in the image restoration process is the first step in making the diffusion of textually specified images possible.

But connecting the text and image data is not that easy, so there is another model used in the process to give more context about the image we want to generate. CLIP, also developed by OpenAI to choose the correct caption of an image between a large number of choices, learns the similarities between the captions and the images. And CLIP’s latent features are used to connect the textual and visual semantics.

An important aspect of the model that wasn’t mentioned yet, the data DALLE-2 was trained on, is a dataset of millions of image-caption pairs. So this was another short story about something very complex and it also lacks some accuracy in favor of not giving you a proper headache.

Finally, the fun part

In the last passage, it was mentioned that OpenAI and DALL-E 2 have some competition. So let’s take a look and see who we are talking about. We will start by introducing each of the solutions, giving you information on how to try it, and end by showing some of the images generated by it.

It is worth noting that all of the models output multiple images for a given text prompt. And they allow the option of regenerating with the same prompt, which should yield a different set of images every time. So to get a good impression the best and the worst yielded image (subjectively selected by the writer of this post) will be displayed for some text prompts.

So let’s meet the contestants.

Stable Diffusion

Developed by Stability.AI, Stable Diffusion has recently had a public release. There is an online environment where it can be tested on the Huggingface platform. Unfortunately, the platform is mostly busy as a result of a large number of requests. Stable Diffusion is also available in a beta version of the desktop application DreamStudio, with the Lite version also being available in the browser. That brings us to Stable Diffusion’s most interesting feature. It can run on consumer-grade GPUs, needing only around 10 GBs of VRAM. API options are still not available.

Let’s start with the examples. Since the official public testing space on the Huggingface platform is always busy, the alternative has been found on the Lexica platform, “a Stable Diffusion search engine”. Lexica has already generated images in its database and allows the generation of an unlimited amount of images at no charge.

Prompt: “sunset with 3 suns, mountains in the background”

Unfortunately, the concept of three suns has not been captured, but we see that the model generated some nice sunset pictures in the best case. In the worst case, we see a level of misunderstanding, where one of the images does not have a sun at all (image 3). The number 3 in the prompt did not influence the generated image until it was written as a word, where it generated three creatures in the best case (image 4). For that reason, the next prompt was selected more conservatively with some additional prompt variations.

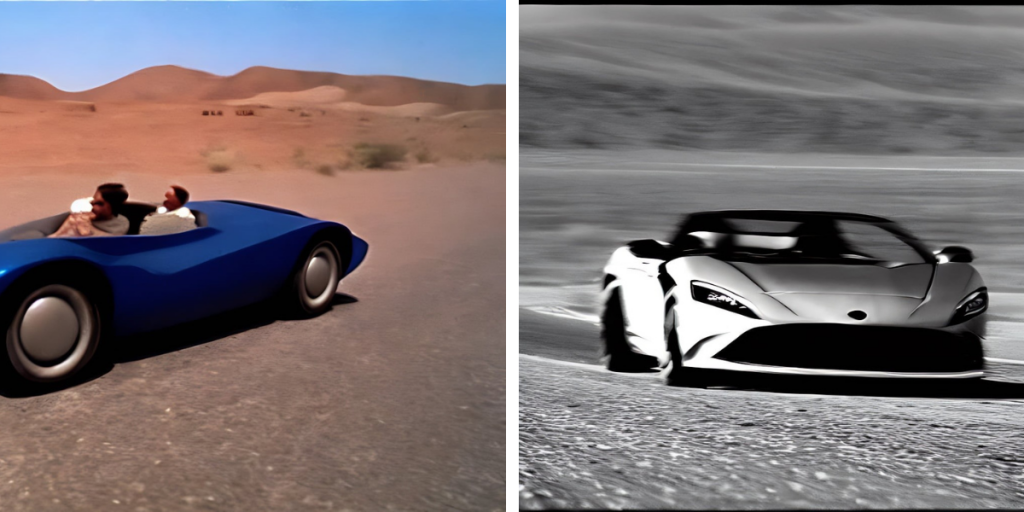

Prompt: sports car driving through the desert

Except for the lack of the car in one image, and some bad perspective, there are no real complaints about the generated images. Scenery looks a little bit like a video game in all of them, so some prompt modifications are introduced.

Prompt: sports car driving through the desert, 2D digital art

Prompt: sports car driving through the desert, analog photograph

Midjourney

Midjourney is a model developed by Midjourney, an independent research laboratory. Not too much information can be found about the model itself, the architecture, the dataset, etc. Midjourney is accessed through Discord (freeware VoIP application designed for the gaming community). By joining the Midjourney’s Discord channel through their website you enter a community of people constantly generating new images. And through a simple command using the Midjourney’s discord bot, you can start generating some images yourself. Unfortunately, the fun of the trial version ends after generating 25 prompts. After that, the cheapest option is 10$/month with a limit of generating around 200 queries/month.

The way the Discord bot works is that it generates 4 images for a basic prompt. Then it offers an option of upscaling or generating 4 variations for each of the 4 images, as well as the option to regenerate the 4 images from the starting prompt. This is displayed in the next example.

All the concepts seem fine considering the abstractness this prompt allows. All the images look more artistic than realistic, and for that reason, “hyperrealistic” was added to the prompt, but without much change. Variations look good, the original concept is kept and the details are changed. Upscaled images become more detailed, but they still look a little bit vague.

Prompt: “space ship flying over pyramids”

An example of an upscaled image, with the addition of “hiperrealistic” into the prompt:

Prompt: “planet earth inside of a snowball, by the window, mountains in the background”

An example of a relatively abstract prompt shows some issues in understanding certain concepts. The lower left example is the only one that shows a degree of understanding of the “by the window” part of the prompt, while none of the examples explicitly show planet Earth. It is worth noting that this prompt could be formed significantly better and that the concept of planet earth inside of a snowball is not an easy image generation task for many reasons. The end result of the prompt is very interesting despite the aforementioned problems.

DALL-E 2

Already described DALL-E 2, developed by OpenAI and available on their website or through an API. The first 50 prompts (4 images per prompt) are free, after which you can buy 115 more for 15$.

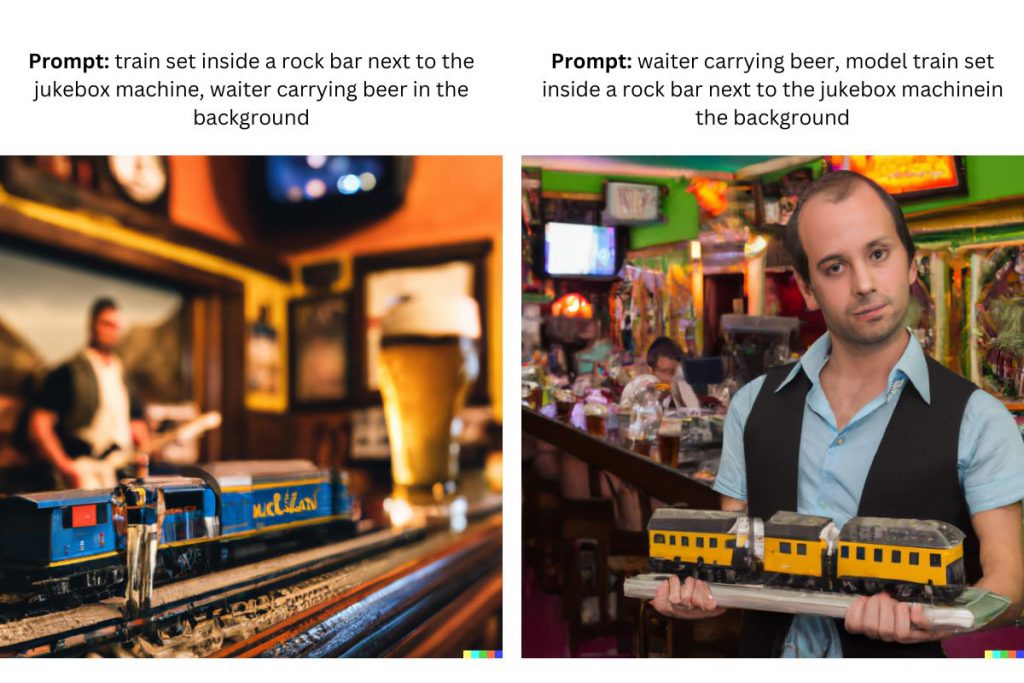

And here are the examples. Each prompt was regenerated once, and the most interesting examples for the most interesting prompts were selected. During this experiment DALL-E demonstrated a good understanding of some concepts and an ability to fulfill most tasks in a detailed prompt. The conclusion was that to achieve good results with DALL-E 2, a higher level of prompt specificity is required. And playing with more complex and specific prompts can lead to fascinating results.

Prompt: river in a rainforest

Prompt: river in a rainforest from above

The two prompts shown above demonstrate the understanding capability and the aforementioned need for precise descriptions, with the addition of “from above” to the prompt changing the perspective completely for all of the generated images. Also, the last example shows an image that looks rendered, as opposed to all the other generated realistic images.

Prompt: a record player standing on a table next to a money tree plant in a white modern living room, records standing on the shelf above

The concept of a shelf with records standing on it has not been shown in any of the generated images. Nevertheless, the plant in the first image looks like the one specified in the prompt and the rest of the image fits the description. In the last image, the record player and the plant look a little toy-like.

After these short analyses, some interesting prompt examples will be displayed, leaving the analysis to the reader and containing only the prompt and the image.

Conclusion…

After a long journey of prompts and images, the only thing left to say is that the best way to see how this works is to try it. It’s also quite fun and in some cases you get to join a community of people prompting, generating, and exploring the abilities of state-of-the-art AI. Have fun 🙂

Literature:

- The recent rise of diffusion-based models, Maciej Domagała, https://deepsense.ai/the-recent-rise-of-diffusion-based-models/

- Deep Unsupervised Learning using Nonequilibrium Thermodynamics, Sohl-Dickstein et al., https://arxiv.org/abs/1503.03585

- Zero-Shot Text-to-Image Generation, Ramesh et al., https://arxiv.org/abs/2102.12092

- CLIP: Connecting Text and Images, OpenAI blog (multiple authors)., https://openai.com/blog/clip/

- How DALL-E 2 Actually Works, Ryan O’Connor, https://www.assemblyai.com/blog/how-dall-e-2-actually-works/

- Stable Diffusion Launch Announcement, https://stability.ai/blog/stable-diffusion-announcement